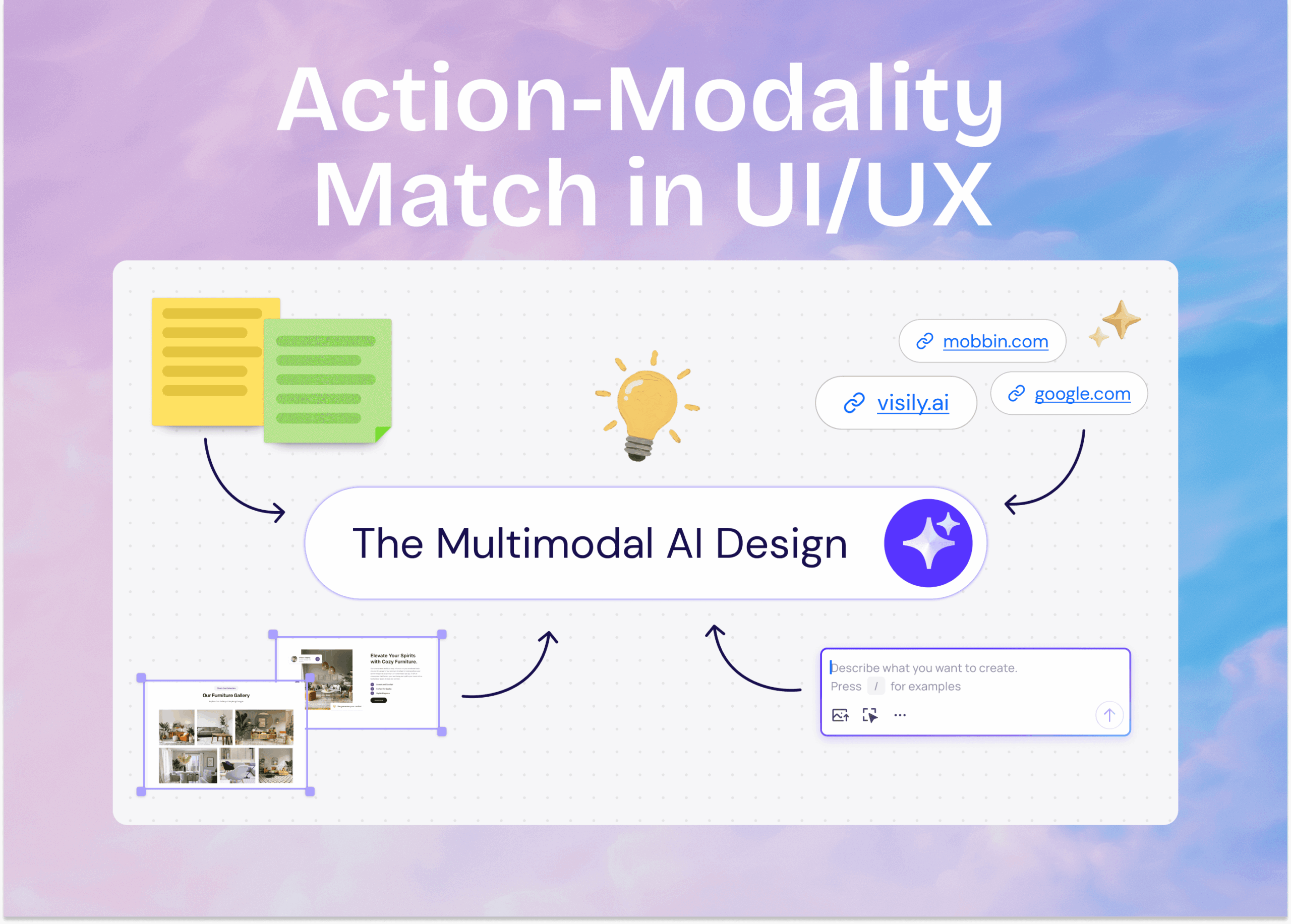

TL;DR: Action-Modality Match is a software UX principle that states that users should be able to complete an action in the way (or "modality") that feels most natural and intuitive to them. Achieving Action-Modality Match often means promoting the most natural ways to accomplish an action, as opposed to merely supporting them. For example, a tool might encourage users to write a natural language text prompt to apply a change across many instances of an object (such as every column in a spreadsheet), while promoting non-AI features, such as drag-and-drop elements, for simple, one-off tasks where text prompts may be slower and less precise.

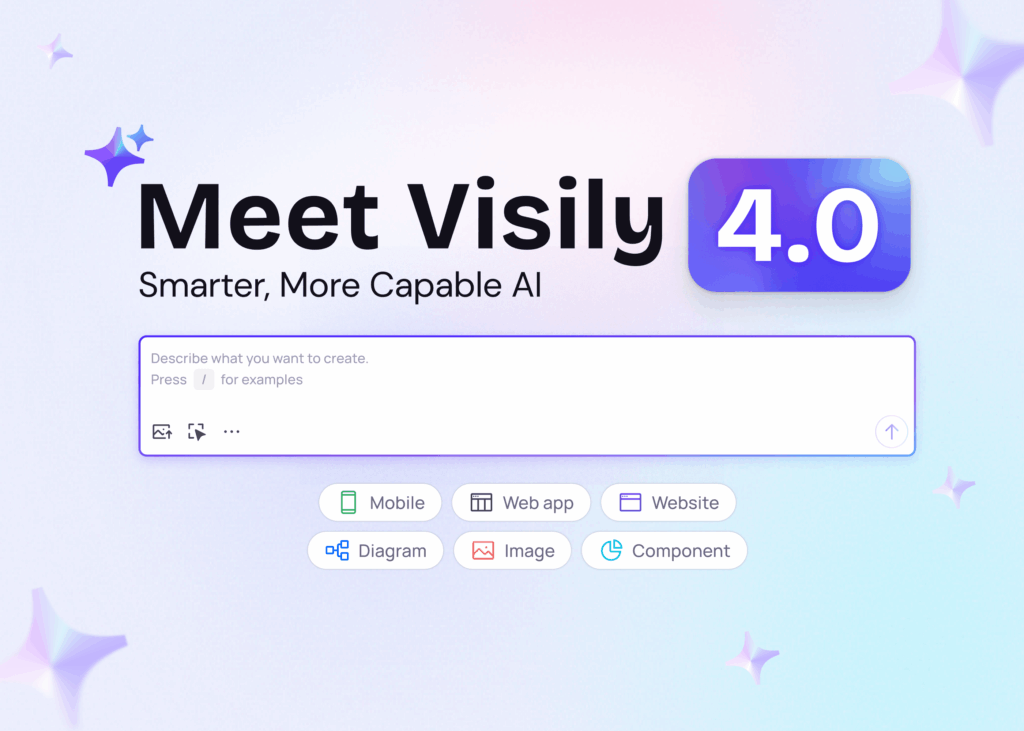

Most AI design tools still force you to start one way: write a prompt, or upload a file. But real product work is messy — half a Slack note, a competitor screenshot, a URL from your PM. Action-Modality Match is Visily’s approach to multimodal AI design, letting you start from anything (text, image, URL, even a combination) and move faster from idea → wireframe → prototype.

Introduction: Real-world work is multi-modal

Despite our attempts to systematize and normalize workflows, experience shows that the ideas, artifacts, and inspiration used in everyday work come in a myriad of forms:

- Screenshots of competitor apps

- Notes written in Jira

- A Slack conversation between colleagues

- A sticky-note-laden digital whiteboard

Some teams manage to create a repeatable process that brings all these inputs together; others rely on ad-hoc ways of working. But this becomes more complicated when we try to bring AI into the mix. To generate useful outputs—like an app prototype or a PRD—AI needs to do the same things humans do: synthesize, organize, and discard information appropriately. That’s only possible if the AI tools can accept different kinds of inputs, just like teams already do in their natural workflows.

Beyond merely supporting different input types (referred to as "multi-modality"), AI-enabled tools need to offer the right controls for the job. These are often simple features found in most software: drag-and-drop interfaces, toolbar options, and presets or templates.

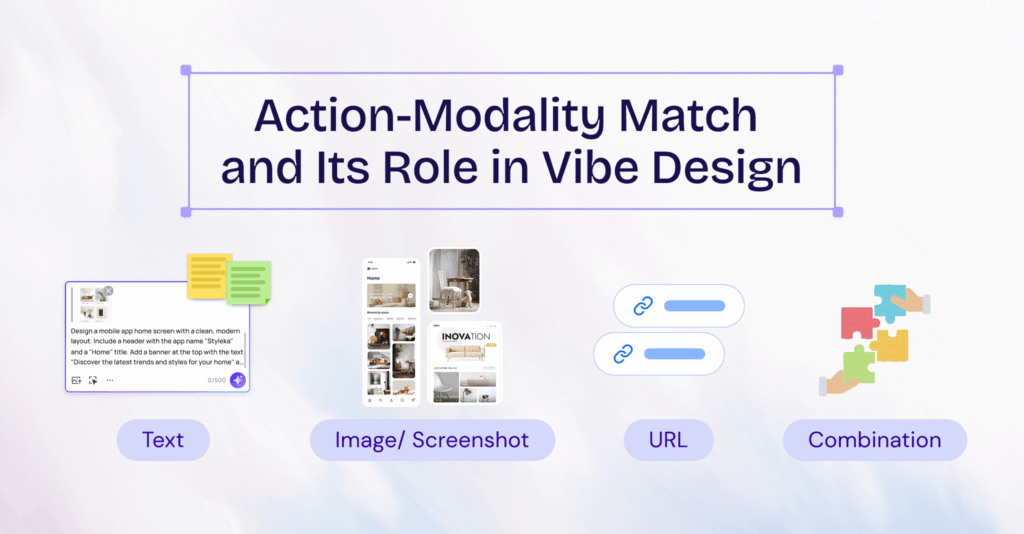

For software to support multi-modal work, it must provide (1) multiple input modalities, such as natural language text prompts and screenshots, and (2) multiple modalities of control, such as AI, a drag-and-drop UI, and multi-select options. Combined, these form the basis of Action-Modality Match.

Modalities of input vs. modalities of control

Input modalities and control modalities are distinct in their purpose. Input modalities are about what you feed into the system: natural language text prompts, screenshots, competitor links, sketches, or sticky notes. In each of these instances, you’re using AI to transform or generate an output based on the input. Control modalities, on the other hand, are about how you achieve your goal: do you need AI at all? Would another feature set make it easier or faster to accomplish the task? Consider a few examples:

- AI is the better control when you want to turn a sketch into a wireframe, reformat a messy block of requirements into a draft PRD, or generate multiple variations of a design concept. These are tasks where automation saves enormous time.

- Direct manipulation is better when you just need to move a button a few pixels to the right, resize a container, or adjust the spacing on a grid. Explaining those tweaks to AI would be slower and more frustrating than simply dragging and dropping.

- Presets and templates shine when you’re applying common patterns: adding a login flow, dropping in a dashboard layout, or using a consistent color scheme across screens. These are moments where speed and consistency matter more than creativity.

For software to truly support multi-modal work, it must provide both:

- Multiple input modalities (text, screenshots, sketches, links, etc.)

- Multiple control modalities (AI, drag-and-drop, batch operations, presets, etc.).

Beyond providing multiple control modalities, software should encourage the right control modality for the desired action. This is especially true in the age of AI-enabled software that often provides a seemingly endless number of ways to achieve an outcome.

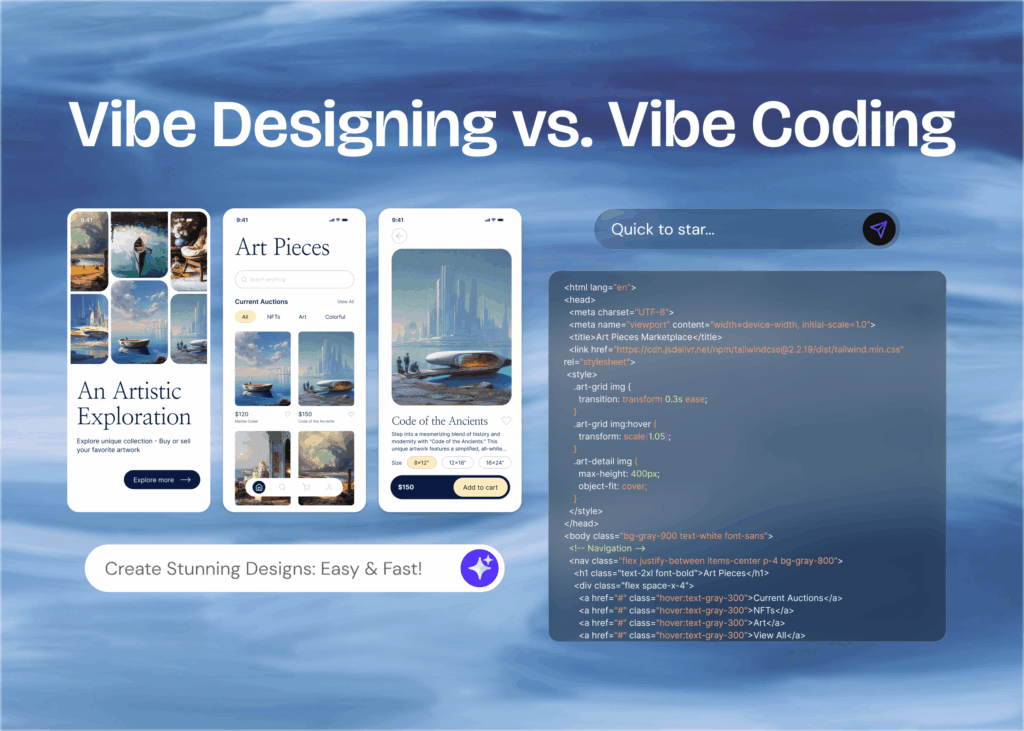

Action-Modality Match and Its Role in Vibe Design

We've established that Action-Modality Match is another way of stating that AI-enabled tools should adapt to the ways users work. This is a key piece of the vibe design workflow: it's what lets teams move from prompt to prototype faster, without being blocked by missing assets.

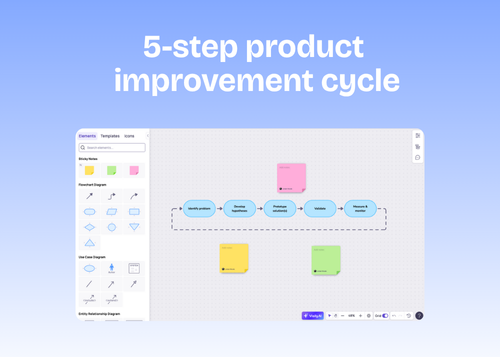

We apply this principle in Visily by first considering all the core actions necessary for creating UI assets:

- Ideating: Sourcing ideas, images, and other resources; creating diagrams and notes.

- Initial asset generation: Using inspiration to create initial UI or components.

- Collaborating: Presenting, getting feedback, and inviting collaborators.

- Modifying: Updating assets and generating new or multiple versions.

- Handing off: Delivering outputs to the team responsible for next steps.

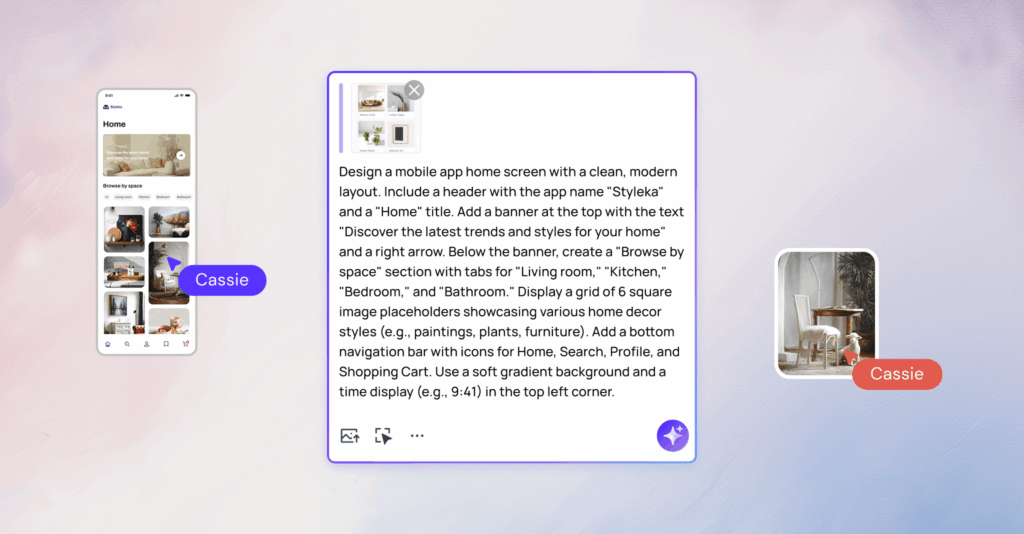

In each of these steps, we identify the most common user flows. For example, our data shows that Visily users are unlikely to create the initial version of their design by simply dragging squares and rectangles onto the canvas. Instead, they’re most likely to use a text prompt or screenshot inspiration. This makes sense: one of Visily’s primary value propositions is that anyone can start from any source of inspiration, which is especially attractive to non-designers who are intimidated by a blank canvas. To encourage users down the “happy path”, we deliberately redesigned the initial generation experience around our multi-modal AI chat assistant, with subtle examples of the types of inputs the user might use.

In contrast, it’s much easier and more natural to drag an element around the canvas to move it than it is to describe the target placement of the element. Because of that, we prominently show an editing panel on the left-hand side of the screen, suggesting to users that simple modifications can be achieved with drag-and-drop elements.

There are many other examples where a specific modality simply makes more sense than others:

- Typing when words come faster than visuals, or you don’t have a source image depicting your idea.

- Inputting screenshots of screens or flows you want to emulate.

- Using a pre-existing template for components you frequently need.

- Pasting a URL of a page whose aesthetic you want to adopt in your design.

Our POV: Real product teams rarely start with a perfect spec. Tools should embrace that chaos and transform whatever you have into something usable — instantly.

Examples: When Each Modality Wins

| Modality | Best Use Case | Why It Works |
|---|---|---|
| Text | New feature ideas, flow descriptions, empty-state brainstorming | Forces clarity of intent and speeds up ideation |
| Image / Screenshot | Competitor analysis, redesign projects, UI teardown | Captures visual structure without manual recreation |
| URL | Benchmarking, quick imports for marketing or onboarding flows | Copies real layouts in seconds and makes them editable |
| Combination | Strategic planning sessions, stakeholder reviews | Produces richer prototypes by layering context (e.g., text for flow + screenshot for layout) |

How It Works in Practice

In Visily, action-modality match is built into the core workflow:

- Start from anything: Paste a screenshot, select a screen, or type a short brief — no need to decide up front.

- AI does the heavy lifting: Instantly converts your input(s) into editable wireframes.

- Iterate visually: Drag, drop, and refine without prompt fatigue.

- Combine modalities midstream: Add text after importing a screenshot or vice versa.

This flexibility eliminates “blank canvas anxiety” and speeds up decision cycles — perfect for PMs, engineers, and founders who need alignment fast.

Designing for Multiple Modalities

To get the most out of multimodal UX:

- Give context: If using text, specify the goal and the audience. If using a screenshot, note what you want to keep or change.

- Layer, don’t restart: Add new modalities as you iterate instead of starting over from scratch.

- Resolve conflicts: If your text says “minimalist” but your screenshot is colorful, clarify priority: “Use this layout but apply a minimalist style.”

These best practices reduce rework and produce stronger first drafts.

Future Trends: Where Multimodal AI Is Headed

Multimodal design won’t stop at text, image, and URL:

- Voice: Describe flows verbally and watch them appear.

- Gestures: Roughly sketch on a touchscreen and let AI clean it up.

- 3D & AR: Generate immersive prototypes for spatial experiences.

Our bet? Soon, modality choice will disappear as a concept. Driven by advances in multimodal AI, design will feel like a conversation where you can show, tell, and refine in the same space.

Want to Go Deeper on Vibe Design?

Action-modality match is just one part of the vibe design workflow. To see how it fits into a full AI wireframing process — from idea capture to stakeholder-ready prototype — check out our guide to vibe design.

Conclusion: From Input Chaos to Design Flow

Action-modality match isn’t just a feature — it’s a new default for AI wireframing and prototyping. By letting you start from text, screenshots, or URLs (or all at once), it:

- Removes friction from starting design work

- Increases participation across roles

- Gets teams to usable prototypes faster

Want to try it yourself? Open Visily and start from whatever you have — a line of text, a competitor screenshot, or a link — and turn it into a wireframe before your next meeting ends.

FAQs: Multimodal AI UX & Action-Modality Match

1. What is multimodal AI UX?

It’s a design approach that lets you interact with AI using multiple input types — text, images, URLs — instead of just one. This creates a more natural, flexible workflow that matches how teams think and work.

2. How does action-modality match fit in?

Action-modality match refers to aligning the modality you use, whether an input (text, image, URL) or a control (an AI prompt, drag-and-drop, a template), with the task you are trying to accomplish. This ensures that you begin and continue your work in the way that feels most natural and intuitive, avoiding unnecessary delays.

Action-modality match turns vibe design from a buzzword into a repeatable practice, helping teams collaborate better and ship faster.

3. Why is this better than just writing prompts?

Because not every idea is best described in words. Screenshots capture visual layouts faster. URLs copy real-world pages instantly. Combining modalities gives richer context, which leads to better AI outputs and fewer rounds of revision.

4. What if my inputs conflict (e.g., text says 'dark mode' but the screenshot uses a light theme)?

Clarify your priority in your follow-up input (e.g., “Use this layout but apply dark mode”). In Visily, you can regenerate sections or restyle components without losing your base layout.

5. Who benefits most from multimodal workflows?

PMs and founders get faster alignment, engineers can validate flows before coding, and designers get a head start instead of starting from scratch. Everyone spends less time describing and more time iterating.

6. Is this just for early-stage design?

No — multimodal inputs also speed up redesigns, competitive teardowns, and A/B testing flows. Think of it as a faster way to get every stakeholder on the same page, at any stage of product development.